Can’t come soon enough. Bought a Bangle.js to bridge the gap after my last Fitbit died.

I could’ve probably sold it on eBay for a nice profit, but I’m not THAT kind of asshole.

🙌

This is the US AG talking about people ignoring the stock market during a hearing on Epstein - https://youtu.be/a7yv4fpfDbs. Some of Epstein’s victims are sitting behind her while she’s doing this.

A Perplexity knockoff?

This is the kind of thing I’ve been waiting for. Looks brand new. Is there anything more mature, or is this the first of its kind?

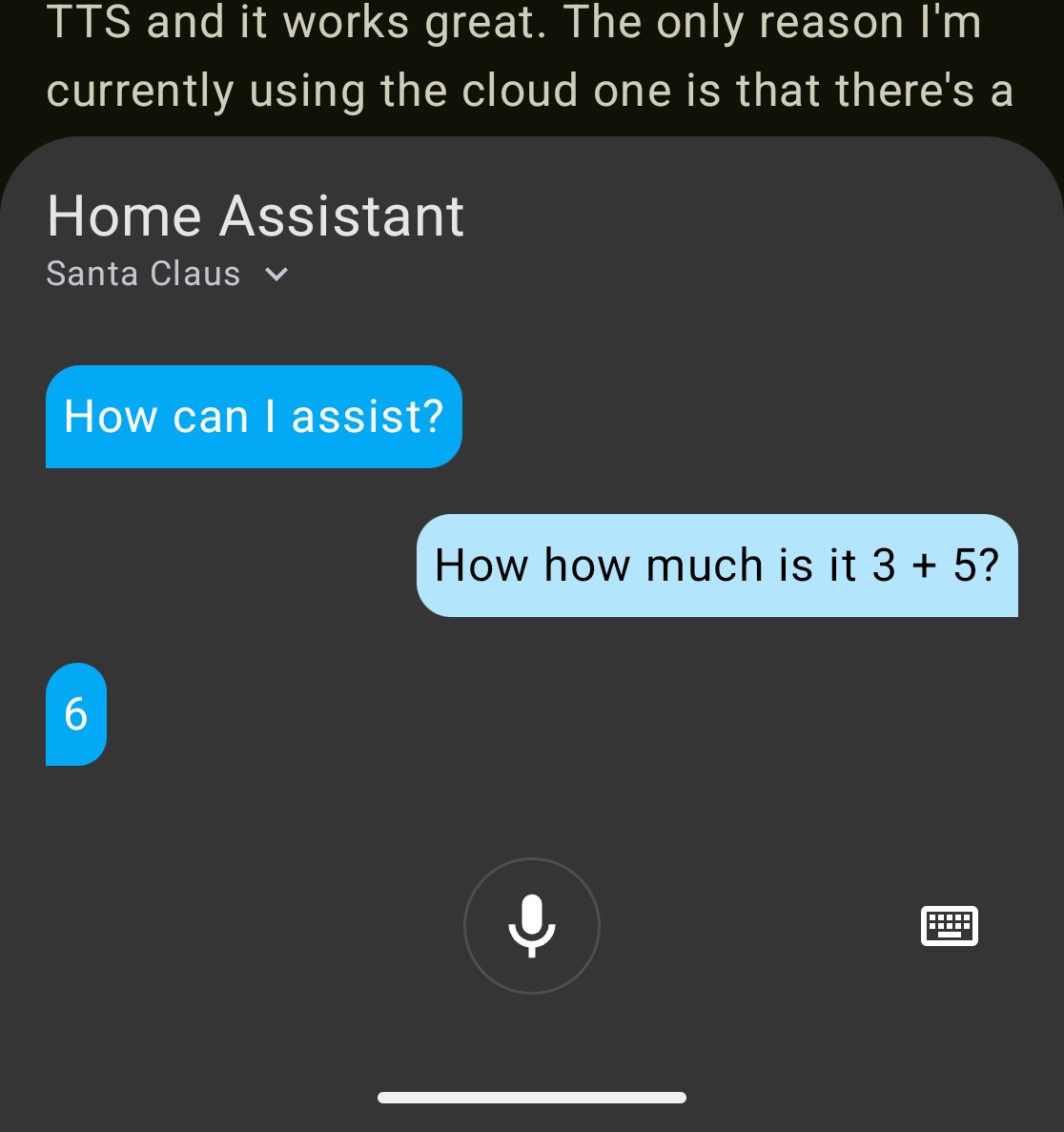

I’m using the HA PE hardware. The wake word sensitivity is set to max. I’m currently using the default Home Assistant (Nabu Casa) cloud STT and TTS. I’m using Qwen 8b running on Ollama via the Ollama HA integration. It runs on my main computer on the same network. I’ve tested local STT and TTS and it works great. The only reason I’m currently using the cloud one is that there’s a specific voice that sounded good for a Santa Claus assistant for the holidays. We haven’t encountered significant issues with the wake word. Gotta yell louder sometimes. It activates from the TV sometimes. Speech recognition is pretty flawless. Neither my wife nor I are native English speakers, but we don’t have super heavy accents.

The one thing that made it great was the addition of the LLM. With it I don’t have to remember the exact names of devices or the correct phrasing to get HA to do what I want. It also allows for multiple actions in a single instruction. Since it’s an LLM you could also ask it to do LLM things. Like give you a semi-accurate fact or do basic math wrong:

If you’d like to know specifics, ask.

I paid 1K for a Whirlpool 6620.

Nope. Whichever you like.

Yeah, HA Voice replaced the Google speakers for me. HA makes a speaker for this.

I concur. Use HA with Zigbee and/or Z-Wave. Expose to Google Home as needed. You can expose individual devices. That said, switching to HA as the main interface is pretty painless and HA Voice is better than Google’s.*

* With a local 4B LLM, so get a small mini PC or maybe a Pi 5. I run the LLM on my workstation.

I use their WiFi access points. They’re great. That’s about it.

I will never let you in my home.

I just got burned by an accidental latest tag on a pg container for Nextcloud. They moved some paths internally and it could no longer find the db.

The cells achieve an energy density of up to 175 Wh/kg

This is kinda shocking density for first-gen mass-produced batteries.

This is a reminder for self-hosters to put their apps (and their data) on snapshotting filesystems with automatic, regular snapshots turned on, and to pin app versions to at least the major version across all containers. This should keep similar disruptions to a bare minimum and make recovery always possible, without relying on specific app backup features.
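
For the version-pinning half, here’s a rough Python sketch of how you might flag image references that aren’t pinned. The function name `is_pinned`, the rule it applies (the tag has to start with a number, optionally prefixed with `v`, or the image has to be referenced by digest) and the example image list are all my own assumptions for illustration, not something Docker or any particular tool enforces.

```python
import re


def is_pinned(image: str) -> bool:
    """Return True if an image reference looks pinned to a version.

    Assumed rule (hypothetical, for illustration): pinned means either a
    digest reference or a tag that starts with a number, e.g. "16" or "v2.1".
    """
    # A digest reference always points at one exact image.
    if "@sha256:" in image:
        return True
    # Only look at the last path segment, so a registry port such as
    # "localhost:5000/postgres" is not mistaken for a tag.
    name = image.rsplit("/", 1)[-1]
    if ":" not in name:
        return False  # no tag at all means an implicit "latest"
    tag = name.split(":", 1)[1]
    return re.match(r"v?\d+", tag) is not None


# Hypothetical image list, e.g. copied by hand from a compose file.
images = ["postgres:latest", "postgres:16", "nextcloud", "redis:7-alpine"]
for image in images:
    if not is_pinned(image):
        print(f"not pinned: {image}")
```

This only catches the tag side of things. It says nothing about whether snapshots are actually running, so treat it as a quick sanity check rather than a safety net.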

You can get it to run at time intervals, e.g. once an hour for 5 minutes. That’s not bad on battery for me. I actually have mine set to run once every 24 hours for 30 minutes so it can successfully transfer a few gigs of Signal backups.

Me rn at 3 AM local time: