This paper looks like it’s essentially run by the US weapons industry. It’s a subsidiary of GovExec, which helps military contractors sell to the government. This means they have an interest in drumming up armed conflict, and you have to read everything they print with that in mind.

A group called Cofacts allows users to forward dubious messages to a chatbot. Human editors check the messages, enter them into a database, and get back to the user with a verdict.

“This year, because gen AI is just so mailable [sic], they just fine-tuned a language module together that can clarify such disinformation…adding back a context and things like that. So we’re no longer outnumbered,” she said.

I’d be highly skeptical of how well this would work. A language model is not a truth machine; it’s just a program that tries to write like a human. There are countless examples of ChatGPT and similar programs saying blatantly false things and making up citations out of thin air.

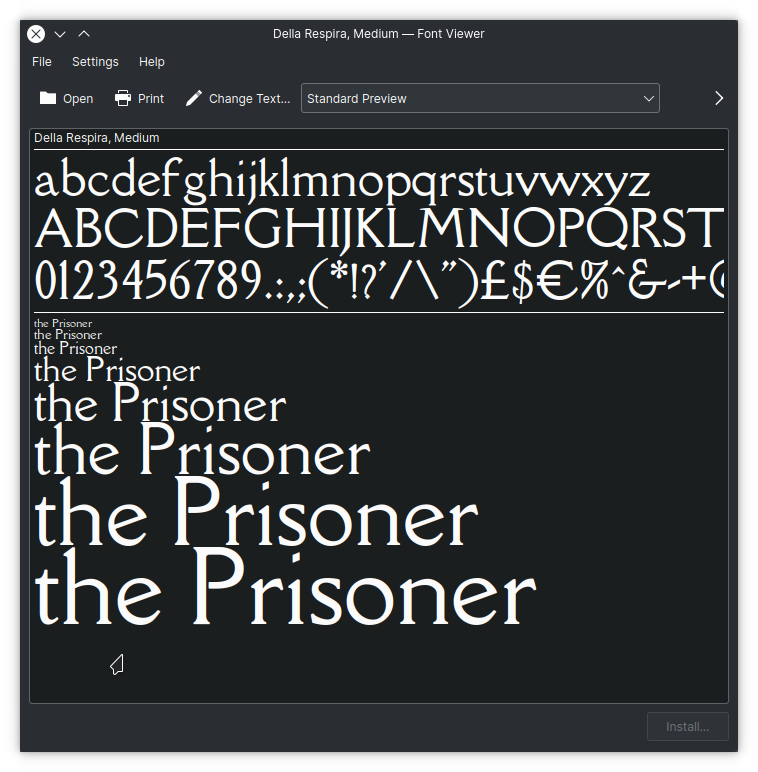

If you want to pay for or pirate a font, there’s of course Albertus. The best free (OFL) font I’ve found with a similar look is Della Respira, though it only comes in a single style:

I’m not sure how you plan on using a proportional font in the terminal, though.